🛠️ Google's Offline AI Offensive: The New Toolkit for Developers & Enterprises

Why On-Device AI Isn't Just a Gimmick: It's Google's Masterstroke for Privacy, Speed, and Pervasive Intelligence.

At Reem Tech Pro this week, the buzz was palpable, and Google certainly delivered. Beyond the dazzling demos, a strategic shift is unfolding: a profound push towards on-device and offline AI. This isn't just about making AI run without the internet; it's a foundational move to redefine privacy, latency, and accessibility for a new generation of AI tools and resources.

This pivot means AI that’s faster, more personal, and potentially more secure, transforming everything from your smartphone to industrial robots.

Curious how these new tools can revolutionize your workflow?

Grab our free guide: 15 AI Prompts That Save You 10+ Hours a Week.

🔒 The Edge Revolution: AI That Stays Local

Google's latest announcements emphasize "edge AI"—processing data right where it's collected, rather than sending it to distant cloud servers. This approach powers several new tools designed to operate on-device and offline, promising significant benefits:

Enhanced Privacy: Data remains local, reducing privacy concerns associated with cloud data transfer.

Reduced Latency: Decisions and actions happen without waiting on a network round trip, which is crucial for real-time applications.

Offline Functionality: AI becomes reliable even in areas with poor or no internet connectivity, opening up new markets and use cases.
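The latency and offline benefits combine into a simple "local-first" routing rule that edge apps often follow. The sketch below is a hypothetical illustration, not a Google API: the `Conditions` fields, the `choose_backend` name, and the idea of a fixed per-request latency budget are all assumptions chosen to show the trade-off.

```python
from dataclasses import dataclass


@dataclass
class Conditions:
    """Hypothetical runtime snapshot; all timings are in milliseconds."""
    network_up: bool
    network_rtt_ms: float   # round trip to a cloud endpoint
    cloud_infer_ms: float   # server-side inference time
    local_infer_ms: float   # on-device inference time
    budget_ms: float        # deadline the feature must meet


def choose_backend(c: Conditions) -> str:
    """Return "local" or "cloud" under an illustrative local-first policy."""
    # Offline: the on-device model is the only option.
    if not c.network_up:
        return "local"
    # Prefer the device whenever it meets the deadline; data never leaves it.
    if c.local_infer_ms <= c.budget_ms:
        return "local"
    # Otherwise use the cloud only if the full round trip fits the budget.
    cloud_total = c.network_rtt_ms + c.cloud_infer_ms
    if cloud_total <= c.budget_ms:
        return "cloud"
    # Neither path meets the deadline: pick whichever is faster.
    return "local" if c.local_infer_ms <= cloud_total else "cloud"


# A 60 ms on-device model beats an 80 ms round trip for a 100 ms deadline.
print(choose_backend(Conditions(True, 80, 40, 60, 100)))  # → local
```

Treating the cloud as a fallback rather than the default is what ties the privacy argument to the latency one: the request only leaves the device when the local model cannot do the job in time.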

For Google, this is not merely a technical upgrade; it's a re-imagining of where and how AI delivers value, and it arrives with new tools and resources developers can use to build smarter applications.

💬 Reem’s Take: The cloud defined the last decade of computing. The next? It’s the edge. Google isn't just shrinking models; they're expanding the reach of AI, democratizing its power beyond data centers and into every pocket and periphery. This is a subtle but massive power shift.

🚀 Google's New Arsenal: Gemma, Gemini Robotics, & Gemini CLI

The core of Google's on-device AI offensive lies in its new and updated models and developer tools:

Gemma 3n: This open, multimodal model (Google publishes the weights under its Gemma license) is engineered to run entirely offline on devices with as little as 2GB of RAM. Built on a new architecture called MatFormer, Gemma 3n handles text in over 140 languages and accepts text, image, audio, and video input without a cloud connection. It's a game-changer for privacy-sensitive and remote environments where sending data to the cloud isn't an option.

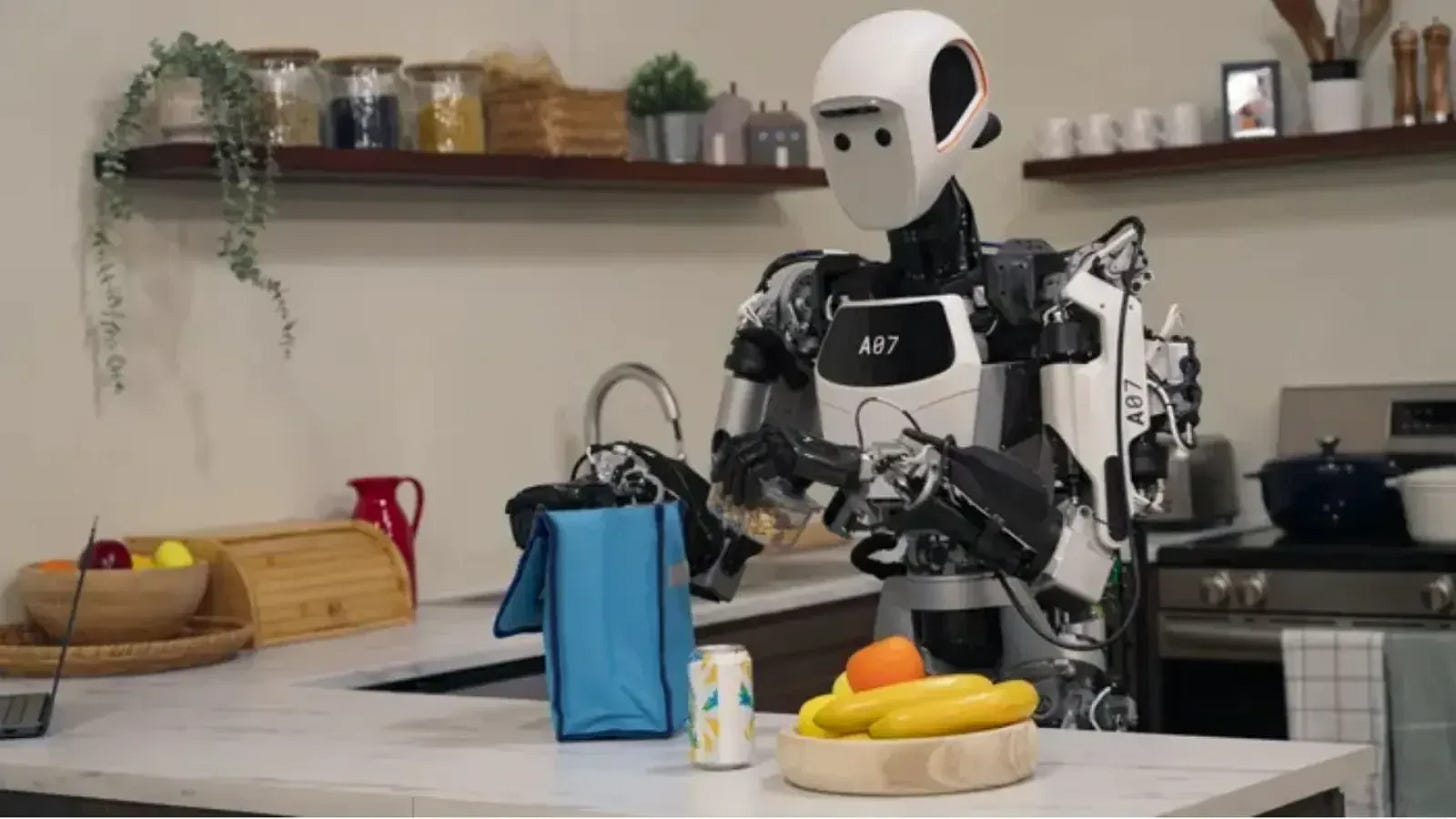
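A back-of-envelope calculation shows why a 2GB memory floor is demanding. The sketch below is generic arithmetic, not Gemma 3n's published memory profile: the 2-billion-parameter count is an illustrative assumption, and the hypothetical `weight_memory_gb` helper ignores activations, the KV cache, and any architecture-specific techniques Gemma 3n uses to shrink its footprint.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Weights-only footprint in gigabytes (1 GB = 1e9 bytes).

    Illustrative only: ignores activations, KV cache, and runtime overhead,
    so real memory use on a device is higher than this figure.
    """
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical 2-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit: {weight_memory_gb(2e9, bits):.1f} GB")
# → 16-bit: 4.0 GB
# → 8-bit: 2.0 GB
# → 4-bit: 1.0 GB
```

At 16-bit precision the weights of such a model would already exceed 2GB on their own, which is why low-bit quantization and memory-saving architectures matter so much on this class of device.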

Gemini Robotics On-Device: Google DeepMind unveiled this lightweight AI model specifically designed to run locally on robots. Trained initially on ALOHA systems, Gemini Robotics On-Device can understand natural language, execute complex multi-step commands, and perform dexterous tasks like folding clothes—all without internet access.

Gemini CLI: For developers, Google launched Gemini CLI, a free, open-source command-line interface that brings Gemini 2.5 Pro directly into the terminal. It supports coding, debugging, content creation, and even multimedia generation, putting powerful AI right in the developer's workspace. Unlike Gemma 3n, though, it is not an offline tool: the CLI runs locally, but the model it calls runs in Google's cloud, so it needs an internet connection.

These new offerings represent a formidable expansion of the AI "toolkit," making advanced AI accessible and deployable in a broader array of scenarios.

💬 Reem’s Take: Don't underestimate the "CLI" part. Google isn't just targeting consumers; they're arming the developers. An AI assistant that lives in the terminal means faster iteration without leaving the command line and, potentially, a new wave of truly innovative AI-powered applications. This is how you win the developer wars.

📈 The Business Impact: Beyond the Hype
