The AI Illusion: Inside the $40M Nate App Fraud That Shook Tech’s Trust

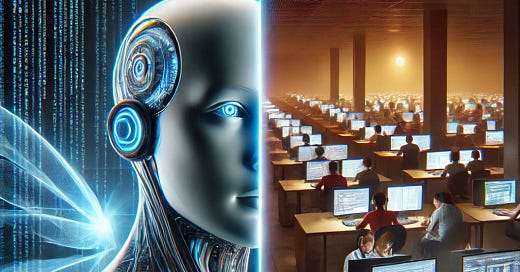

The Real Cost of AI-Washing, and What Every Executive Must Learn Now

Behind the facade of Nate’s AI promise stood a team of underpaid call center agents in the Philippines.

🤖 When AI Isn't Really AI

In the $40M Nate app scandal, the algorithm was… people. Literally. Former CEO Albert Saniger is now facing federal fraud charges after promoting a so-called “AI-powered” shopping assistant that, in reality, relied on call center workers in the Philippines.

Investors bought the hype. Consumers trusted the product. And the market? Shaken.

Reem’s Take 🧠: If AI is today’s gold rush, then AI-washing is the modern fool’s gold—and it's everywhere. This scandal isn’t just a one-off. It’s a red flag for an industry running too fast, with too little scrutiny.

💸 The Scam, Unpacked

Saniger pitched Nate as the future of e-commerce automation: a frictionless shopping assistant powered by machine learning. But prosecutors say:

No AI models were functioning as promised.

Remote workers performed all key tasks.

Over $40M was raised under false claims.

Reem’s Take 🎯:

Tech leadership isn’t about buzzwords—it’s about verified capability. If your AI can’t pass a blind test, don’t sell it. Period.

🚨 AI-Washing Is the New Vaporware

The Nate scandal has ignited fresh debate about AI-washing—the practice of inflating AI claims to boost valuations and visibility. Here’s what’s happening:

Startups are pitching pseudo-AI to raise capital.

Enterprises are onboarding fake tools, putting operations at risk.

Investors are losing trust, and regulators are circling.

Reem’s Take 🔍:

The next big AI crash won’t come from tech failure—it’ll come from trust failure. And when trust breaks, regulation follows.

🌍 Global Stakes: Why This Hits Harder Than It Looks

This isn’t just about one app—it’s a wake-up call for every sector betting on AI.

In Financial Services:

Risk: AI-driven credit scoring tools may rely on biased or opaque data, risking regulatory fines and reputational damage.

Move: Implement algorithmic audits and ensure explainability for compliance.

In Retail & E-commerce:

Risk: Overstated AI personalization features can mislead customers and investors alike.

Move: Verify automation claims and prioritize transparency in consumer-facing tech.

In Enterprise SaaS & HR Tech:

Risk: HR platforms claiming "AI recruiting" may simply automate basic filters, inviting discrimination risks.

Move: Demand clarity on what’s AI vs. rules-based logic, and ensure fairness testing is in place.
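To make "fairness testing" concrete, here is a minimal sketch of one common screen, the four-fifths rule of thumb from U.S. EEOC guidelines: compare each group's selection rate to the highest group's rate and flag any ratio below 0.8. The function names, group labels, and sample numbers are all hypothetical, and a real audit would go well beyond this single check.

```python
from collections import Counter

def selection_rates(records):
    """records: iterable of (group, was_selected) pairs. Returns {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_ratios(records):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}

# Hypothetical screening outcomes: 40 of 100 selected in group A, 24 of 100 in group B.
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)

ratios = adverse_impact_ratios(sample)
# Groups whose ratio falls under the 0.8 threshold warrant a closer look.
flagged = [g for g, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

A flagged group is a prompt for investigation, not proof of discrimination. The point is that a vendor claiming "AI recruiting" should be able to hand over numbers like these on request.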

🧩 Fixing the Governance Gap

The Nate fiasco underscores a systemic flaw: no enforceable AI accountability standards.

What we need:

🔎 AI Fact-Labeling like nutritional labels for transparency

🧾 Proof-of-Performance Demos for all investor-backed AI tools

🛡️ Regulatory teeth, not just frameworks. Think EU AI Act and the U.S. Blueprint for an AI Bill of Rights, now with enforcement

Reem’s Take 💼:

Think of AI like medicine: powerful, promising, but deadly if mislabeled. It’s time we regulated accordingly.

🧠 Your Playbook: Vetting AI in the Wild

Here’s your executive cheat sheet to sniff out AI smoke:

✅ Ask for actual model demos—not slides.

✅ Demand performance data and user analytics.

✅ Watch out for secrecy cloaked as “proprietary.”

✅ Verify who (or what) is doing the work.
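One way to act on the last two checks is to ask a vendor for raw task logs and compute the automation rate yourself. The sketch below assumes a hypothetical log format in which each completed task records whether a model or a human finished it. `TaskRecord`, the `completed_by` labels, and the sample data are illustrative and do not come from any real product.

```python
from dataclasses import dataclass

@dataclass
class TaskRecord:
    task_id: str
    completed_by: str  # hypothetical labels: "model" or "human"

def automation_rate(records):
    """Share of completed tasks that the model finished with no human step."""
    if not records:
        raise ValueError("no task records supplied")
    automated = sum(1 for r in records if r.completed_by == "model")
    return automated / len(records)

# Hypothetical sample: one of four tasks was completed by the model.
log = [
    TaskRecord("t1", "human"),
    TaskRecord("t2", "human"),
    TaskRecord("t3", "model"),
    TaskRecord("t4", "human"),
]

rate = automation_rate(log)
print(f"Automation rate: {rate:.0%}")
```

If a vendor cannot or will not produce logs that support a calculation like this, treat that as a finding in its own right.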

Reem’s Take 📊:

If you can’t explain the core engine of your AI in 90 seconds, you shouldn’t fund it—or use it.

✅ What Real AI Leadership Looks Like
